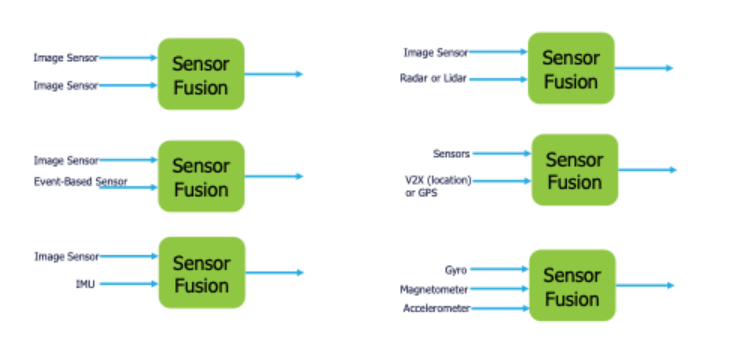

A key strategy for fully autonomous vehicles is fusing inputs from multiple sensors, which is crucial for making safe and reliable decisions. It has proved much more difficult than initially thought.

Many issues need to be addressed, including how to partition, prioritize, and ultimately combine different types of data, and how to architect the processing inside the vehicle so that it can make decisions based on those data types quickly enough to avoid accidents. There is no single best practice for achieving this, which is why many automotive original equipment manufacturers (OEMs) have taken vastly different approaches.

"There are three main ways to approach this issue," said David Fritz, Vice President of Hybrid Physical and Virtual Systems, Automotive, and Aerospace Business at Siemens Digital Industries Software. "One approach is to fuse raw data from multiple sensor sources before processing. Although this method can reduce power consumption, poor data from one sensor array may contaminate good data from other sensors, leading to poor results. In addition, transmitting large amounts of raw data brings other challenges, such as bandwidth, latency, and system cost.

"The second method is object fusion, where each sensor processes its own data and reports the result as an interpretation of what it detected. The advantage of this approach is that results from on-board sensors can be seamlessly integrated with those from infrastructure sensors, as well as sensors on other vehicles. The challenge is a universal representation and labeling of objects, so that they can be shared between different vehicles and infrastructure.

"The third option is a hybrid of the first two. From the perspective of power, bandwidth, and cost, this is the most attractive option we have found. In this approach, objects are detected by the sensors but not classified.
In this case, the point cloud of each object is transmitted to the on-board central computing system, which classifies (labels) the point clouds from different internal and external sensors."

The discussion in the automotive ecosystem has only just begun, and there are still many challenges to overcome.

"You need to figure out what you have and when to use it," said Frank Schirrmeister, Vice President of Business Development at Arteris IP. "The formats are all very different. If you're looking at LiDAR, you get distance maps. With a camera, it's RGB with a set of pixels. With thermal, it's something else. Even before you correlate and fuse all these things, you need to understand these formats in some way. From an architectural perspective, that may make it most desirable to do the processing on or near the sensor. Then, object correlation is performed across the different sensors. But you need to figure out the details, such as how hot the object is, how far away it is, and so on. It's a Venn diagram of these different sensors. They have a set of overlapping features, and some sensors are better at some of them than others."

Sensor fusion is a rapidly innovating field, thanks to continuous improvement in algorithms and the chip industry's deep understanding of SoC architecture.

"One common feature of sensor fusion is the need for heterogeneous processing, as it requires a combination of signal processing, typically on a DSP, AI processing on dedicated accelerators, and control code on a CPU," said Markus Willems, Senior Product Manager at Synopsys. "Depending on the type of sensor, different data types need to be supported. These include 8-bit integer processing for image data and 32-bit single-precision (SP) floating point for radar processing, while AI processing may require bfloat16, among others.
Running different types of processors on a single chip requires a sophisticated software development flow, using optimized C/C++ compilers and libraries, as well as graph mapping tools that support the latest neural networks, including the transformers used in sensor fusion. Memory, bandwidth, and latency are key design parameters, and designers expect early availability of processor simulation models and SoC architecture exploration tools to examine what-if scenarios."

Although sensor fusion has received a lot of attention in the automotive industry, it is also useful in other markets.

"We focus on the automotive field, where there will be image sensors in cameras, along with radar and LiDAR," said Pulin Desai, Director of Product Management Group at Cadence Tensilica IP Group. "There may also be image sensors and IMUs in robotics applications. There may be multiple image sensors, and you will fuse those things. Other sensors include gyroscopes, magnetometers, and accelerometers, which are used in various ways in many different fields. Although there is a lot of attention on automotive, the same image sensors and radar sensors are also used in household floor-cleaning robots. The architecture may be very similar in a drone. Any type of autonomous vehicle has this type of sensor."

There is a large amount of data flowing. Figuring out where to process it is a challenge, partly because not all of the data is in the same format.

"It's a classic edge computing case," said Arteris' Schirrmeister. "You need to decide how to balance the processing along the whole chain, from getting data out of the analog world to making decisions in the vehicle's brain or interacting with the driver in a mixed mode. You need to decide whether the different sources disagree with each other. If they do, which do you choose? Do you use an average? Combining all these sensors is definitely a challenge."
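
The question of what to do when sensors disagree, and whether to average, can be made concrete with a small sketch. The following is one possible answer under stated assumptions, not a method attributed to anyone quoted here: each sensor reports a distance plus an assumed 1-sigma uncertainty, the fused value is an inverse-variance weighted mean, and a sensor is flagged when it sits far from the fused value relative to its own uncertainty. The names `RangeEstimate`, `fuse_ranges`, and `gate_sigmas`, and all the numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    sensor: str        # hypothetical label, e.g. "lidar"
    distance_m: float  # estimated distance to the object, in meters
    sigma_m: float     # assumed 1-sigma measurement uncertainty, in meters


def fuse_ranges(estimates, gate_sigmas=3.0):
    """Return (inverse-variance weighted mean, list of disagreeing sensors)."""
    weights = [1.0 / (e.sigma_m ** 2) for e in estimates]
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / sum(weights)
    # A sensor "disagrees" if it is more than gate_sigmas of its own
    # uncertainty away from the fused value.
    outliers = [e.sensor for e in estimates
                if abs(e.distance_m - fused) > gate_sigmas * e.sigma_m]
    return fused, outliers


# Made-up readings for a single object seen by three sensors.
readings = [
    RangeEstimate("lidar", 20.0, 0.1),
    RangeEstimate("radar", 20.4, 0.5),
    RangeEstimate("camera", 23.0, 2.0),
]
fused_m, disagreeing = fuse_ranges(readings)
print(f"fused distance: {fused_m:.2f} m, disagreeing sensors: {disagreeing}")
```

A plain average would let the least precise sensor pull the result as hard as the most precise one. Weighting by uncertainty avoids that, but it still assumes the uncertainties are known and honest, which is itself part of the challenge described above.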

When it comes to actual data fusion, Siemens' Fritz has observed many approaches. "Some of the early companies that ventured into this field, such as NVIDIA, said, 'We can do a lot of things related to AI. When the sensor data comes in, we can use our high-end GPUs, try to reduce their power consumption, and then process it with neural networks.' That's why a few years ago we had a rack in the trunk that had to be water-cooled. Then you threw in the LiDAR people, and they said, 'I know you can't pay $20,000 for each LiDAR, so we're working hard to make it cheaper.' And then someone said, 'Wait a minute. A camera only costs 35 cents. Why don't we put in a bunch of cameras and fuse those together?'

"This started a few years ago with a brute-force, almost brainless method," he said. "This is the 'I have raw LiDAR data, I have raw camera data, I have radar, LiDAR, and camera. How do I connect these together?' approach. People have done some crazy things, such as converting LiDAR data into RGB. There are multiple frames because of the distance information. Then it is run through the simplest convolutional neural network to detect objects and classify them. That's the extent of it. But some people are still trying to do it that way."

In contrast, Tesla still relies primarily on camera data. Fritz said that this is possible because stereo cameras, and even mono cameras, can use disparity to determine depth, comparing consecutive frames captured a fixed time apart.

"That's why they say, 'Why do I need LiDAR? Because I don't have LiDAR, I don't have the sensor fusion problem. It just simplifies things.' But if the camera lens is covered with water or dirt, they have those issues to worry about. At the other extreme, if you rely entirely on LiDAR, I've seen scenarios where you have a two-dimensional image of a person crossing the street, and the car thinks it's a real person. Why? Because of the reflection. People don't realize that LiDAR is picking up all sorts of things, and it's hard to filter those out."
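
The idea of getting depth from disparity can be illustrated with the standard pinhole stereo relation, depth = focal length × baseline / disparity. This is a minimal sketch for a rectified stereo pair, not a description of any particular vendor's pipeline. The function name and the focal length, baseline, and disparity values are assumptions chosen for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Pinhole stereo relation: depth = focal_length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


# Assumed example values: 1000 px focal length, 0.3 m between the two views.
focal_length_px = 1000.0
baseline_m = 0.3

for disparity_px in (60.0, 15.0, 3.0):
    depth_m = depth_from_disparity(disparity_px, focal_length_px, baseline_m)
    print(f"disparity {disparity_px:5.1f} px -> depth {depth_m:6.1f} m")
```

Because depth is inversely proportional to disparity, distant objects produce only a few pixels of shift, so small matching errors turn into large depth errors. With a single camera, the second view has to come from a later frame, which additionally requires knowing how far the vehicle moved between frames; that case is not shown here.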

The fusion of different data types also depends on the types of sensors present.

"People talk about early, mid, and late fusion," said Cadence's Desai. "It all depends on our customers and their system design, which dictates what type of problem they are trying to solve. We are agnostic about these things, because there are stereo sensors where you can do early fusion, or you can do late fusion because your images and other data have already been through object detection. It could also be mid fusion, which is more a choice for the system vendor: how much computation they want to do, how robust the information is, or what type of problem they are trying to solve. How hard is it? Well, it depends on the type of fusion."
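
The difference between early and late fusion can be shown with a deliberately simple toy. In this sketch, which is an illustration under invented assumptions rather than any vendor's design, each sensor reports a return strength for the same six cells. Early fusion combines the raw values and runs one detector; late fusion runs a detector per sensor and merges the resulting object lists. The threshold detector, the averaging rule, the union rule, and the sample values are all hypothetical.

```python
def detect(signal, threshold):
    """Toy detector: indices of cells whose value meets the threshold."""
    return {i for i, v in enumerate(signal) if v >= threshold}


def early_fusion(signal_a, signal_b, threshold):
    """Combine raw samples first, then run one detector on the result."""
    combined = [(a + b) / 2.0 for a, b in zip(signal_a, signal_b)]
    return detect(combined, threshold)


def late_fusion(signal_a, signal_b, threshold):
    """Run a detector per sensor, then merge the object lists (union here)."""
    return detect(signal_a, threshold) | detect(signal_b, threshold)


# Hypothetical per-cell return strengths from two sensors over the same cells.
camera = [0.1, 0.9, 0.2, 0.1, 0.6, 0.0]
radar = [0.0, 0.8, 0.1, 0.7, 0.2, 0.1]

early_hits = early_fusion(camera, radar, 0.5)
late_hits = late_fusion(camera, radar, 0.5)
print("early fusion detections:", sorted(early_hits))
print("late fusion detections: ", sorted(late_hits))
```

With these made-up numbers, the early path keeps only the cell both sensors agree on, while the late path also keeps cells seen by just one sensor. Neither outcome is inherently better. The choice depends on the system design and the problem being solved.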

Desai said another issue to consider is when to use AI/machine learning, particularly given the attention it is getting, and when classical DSP algorithms are more suitable.

"I compare some of the things we did in the past with what we are doing today," he said. "For certain problems, you have a definite method that achieves a high success rate with AI. For example, when we did face and person detection in 2012 and 2013, we used classic computer vision algorithms, but those algorithms were not very accurate. It was very difficult to achieve good accuracy. Then, when we moved to AI, face detection and person detection became very robust. So now there is a very well-defined situation where you say, 'I want to do face detection. What we call human accuracy is 99%, and AI can give me 97%. Why should I play around with something that is not good enough?' I will use AI because I know what it does, and it provides the best accuracy. But in other situations, such as when I am still struggling to solve a problem, I need to try different algorithms and tune them in my environment. I need to be able to do X, Y, or Z. I need flexibility. There, you continue to use digital signal processors for those algorithms."

In addition, data often must be preprocessed before it enters an AI engine, which means it must be put into a specific format.

"Your AI engine may say, 'I only do fixed point,' so it needs a specific data type," Desai explained. "So you may use a programmable engine to do that. And once you put something into AI, you may not have much flexibility. Four years later, when something new comes along, you may have to change it. There are many different factors."
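
The preprocessing step Desai describes, putting data into the fixed-point format an AI engine expects, can be sketched as a simple quantization. This is a minimal illustration that assumes a signed 8-bit format with 6 fractional bits (a scale of 2**-6); real engines define their own formats, and the function names and sample values here are hypothetical.

```python
def quantize_to_int8(values, scale):
    """Map floats to signed 8-bit integers: q = round(x / scale), clamped."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in q_values]


# Assumed format: 6 fractional bits, so one integer step is 2**-6 = 0.015625.
scale = 2 ** -6
samples = [-1.0, -0.25, 0.0, 0.5, 3.0]

quantized = quantize_to_int8(samples, scale)
restored = dequantize(quantized, scale)
for x, q, r in zip(samples, quantized, restored):
    print(f"{x:6.3f} -> {q:4d} -> {r:8.5f}")
```

Values outside the representable range, such as the last sample here, are clamped, which is one reason the scale has to be chosen to match the sensor data. Doing this step on a programmable engine keeps the choice of scale and format changeable later.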

"Essentially, if you are doing something very well-defined, you know you can achieve very high performance, and you know it today," Desai said. "You may say, 'I'm going to use AI to solve this problem today.' Tomorrow, I may still do that. Then I add flexibility by using a programmable engine. Or, if I don't know exactly what I need to handle, I will still use classical algorithms. And even if I have AI, I still need to pre-process and post-process the data, so I need classical DSP algorithms for that."

Conclusion

As automotive OEMs and systems companies shift their computing architectures toward sensor fusion, experimentation will be a necessity. Siemens' Fritz believes that at this stage, the right way to handle development is to hire or form a small team, perhaps a dozen or twenty people, to run a large number of pilot projects. Their goal could be, for example, to have 300 prototype cars in a test environment by 2026 or 2028.

However, where each OEM stands today depends on the OEM, how long it has been working on architecture development, and how it wishes to proceed.

News from: Siemens Digital Industries David Fritz
